Query Your Toaster

November 15, 2007

People have asked for Qt’s XQuery & XPath support to not be locked to a particular tree backend such as QDom, but to be able to work on arbitrary backends.

Any decent implementation (such as XQilla or Saxon) provides that nowadays in some way or another, but I’d say Patternist’s approach is novel, with its own share of advantages. So let me introduce what Qt’s snapshot carries.

    <ul>
    {
        for $file in $exampleDirectory//file[@suffix = "cpp"]
        order by xs:integer($file/@size)
        return <li>
                   {string($file/@fileName)}, size: {string($file/@size)}
               </li>
    }
    </ul>

and the query itself was set up with:

    QXmlQuery query;
    FileTree fileTree(query.namePool());
    query.setQuery(&file, QUrl::fromLocalFile(file.fileName()));
    query.bindVariable("exampleDirectory", fileTree.nodeFor(QLibraryInfo::location(QLibraryInfo::ExamplesPath)));

    if(!query.isValid())
        return InvalidQuery;

    QFile out;
    out.open(stdout, QIODevice::WriteOnly);
    query.serialize(&out);

These two snippets are taken from the example found in examples/xmlpatterns/filetree/, which, in about 250 lines of code, virtualizes the file system into an XML document.

In other words, with the tree backend FileTree that the example has, it’s possible to query the file system, without converting it to a textual XML document or anything like that.

And that’s what the query does: it finds all the .cpp files on any level in Qt’s examples directory and generates an HTML list, ordered by file size. Maybe generating a view for image files in a folder would have been a tad more useful.

The usual approach to this is an abstract interface/class for dealing with nodes. That brings disadvantages: heap allocations, and the need to allocate such node structures in the first place, which in turn affects the implementation of whatever one is going to query.

But a long time ago Patternist was rewritten to use Qt’s items & models pattern, which means any existing structure can be queried without touching it. That’s what the FileTree class does: it subclasses QSimpleXmlNodeModel and hands out QXmlNodeModelIndex instances, which are light, stack-allocated values.

Combined with the engine trying to evaluate in a streamed and lazy manner, to the degree that it thinks it can, this means fairly efficient solutions should be doable.
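To make the items & models idea concrete, here is a minimal sketch in plain C++ that does not use Qt at all. The names `Entry`, `NodeIndex`, `EntryModel` and `countBySuffix` are made up for illustration and are not the QSimpleXmlNodeModel API; the only point is that the data stays untouched while the model hands out small index values that stand in for nodes.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Pre-existing application data that the model must not modify.
// Each entry records the position of its parent; entry 0 is the root
// and, by assumption in this sketch, is its own parent.
struct Entry {
    std::string name;
    std::size_t parent;
};

// A light value handed out by the model; it stands in for a node.
// (Hypothetical; it only plays the role an index plays in the post.)
struct NodeIndex {
    std::size_t row;
};

// The model interprets indexes against the untouched data.
class EntryModel {
public:
    explicit EntryModel(const std::vector<Entry> &entries) : m_entries(entries) {
        assert(!entries.empty()); // there must at least be a root
    }

    NodeIndex root() const { return NodeIndex{0}; }

    std::string name(NodeIndex n) const { return m_entries.at(n.row).name; }

    // Collects the direct children of n; the root (row 0) is skipped
    // so that it is never reported as its own child.
    std::vector<NodeIndex> children(NodeIndex n) const {
        std::vector<NodeIndex> result;
        for (std::size_t i = 1; i < m_entries.size(); ++i)
            if (m_entries[i].parent == n.row)
                result.push_back(NodeIndex{i});
        return result;
    }

private:
    const std::vector<Entry> &m_entries; // borrowed, never copied or changed
};

// Counts all descendants of start whose name ends in suffix, roughly what
// a path step such as //file[@suffix = "cpp"] would select.
std::size_t countBySuffix(const EntryModel &model, NodeIndex start,
                          const std::string &suffix) {
    std::size_t count = 0;
    for (NodeIndex child : model.children(start)) {
        const std::string n = model.name(child);
        if (n.size() >= suffix.size() &&
            n.compare(n.size() - suffix.size(), suffix.size(), suffix) == 0)
            ++count;
        count += countBySuffix(model, child, suffix);
    }
    return count;
}
```

The index is a plain value that is cheap to copy and lives on the stack, and nothing in `Entry` had to inherit from a node interface. A real model would of course answer many more questions (attributes, node kind, document order) than this sketch does.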

So what does this mean? It means that, if you would like to, you can relatively cheaply use the XQuery language on top of your custom data structure, as long as it is somewhat hierarchical.

For instance, a backend could bridge the QObject tree, such that the XQuery language could be used to find Human Interface Guideline violations within widgets; molecular patterns in a chemistry application could be identified concisely with a two- or three-line XPath expression; and the documentation carries on with a couple of other examples. No need to convert QWidgets to nodes, or force a compact representation to subclass an abstract interface.

A case I find intriguing would be a web robot that models the links between different pages as a graph, and finds invalid documents & broken links using the doc-available() function, or reports URIs that a website shouldn’t be linking to (such as a public site referencing intranet pages).

Our API freeze is approaching. If something is needed but missing, let me know.

Integrating Compiler Messages

October 23, 2007

Attention to detail is a fine thing, but compiler messages have historically not received it. Here’s an example of GCC’s output:

    qt/src/xml/query/expr/qcastingplatform.cpp: In member function 'bool CastingPlatform::prepareCasting():
    qt/src/xml/query/expr/qcastas.cpp:117: instantiated from here
    qt/src/xml/query/expr/qcastingplatform.cpp:85: error: no matching function for call to 'locateCaster(int)'
    qt/src/xml/query/expr/qcastingplatform.cpp:93: note: candidates are: locateCaster(const bool&)

Typically compiler messages have been subject to crude printf approaches, and dignity has been left out: localization, translation, consistency in quoting style (for instance), adapting language to users (e.g., not phrasing things the way compiler engineers prefer), good English, and just generally looking sensible.

Solving that requires quite some work, which probably explains why it is often left out. Having line numbers, error codes, names of functions, and whatever else available and flowing through the system requires quite some plumbing and room in the design.

Another thing is that nowadays we really should expect compiler messages within IDEs or other graphical applications to be sanely typeset. If not, we’ve lost ourselves in all this UNIX stuff. Keywords and important phrases should be italic, emphasized or colorized, depending on the GUI style.

For shuffling compiler messages around it is customary to pass a set of properties: a URI, line number, column number, a descriptive string, and possibly an error code. Apart from falling short of the goals outlined in this text, this approach runs into a problem which I think the GCC example above illustrates: what does one do if the message involves several locations?

Even if a message involves several locations, it is still one message and should be treated and presented as such. The approach of using a struct with properties falls short here, and chops the message into as many parts as it has locations.

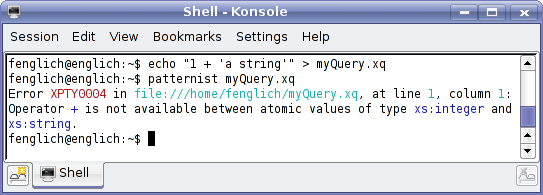

For Patternist I wanted to make an attempt at improving messages. So far it is an improvement at least. For instance, for this message that the command line tool patternist outputs:

the installed QAbstractMessageHandler was passed a QSourceLocation and a message which read:

<p>Operator <span class='XQuery-keyword'>+</span> is not available between atomic values of type <span class='XQuery-type'>xs:integer</span> and <span class='XQuery-type'>xs:string</span>.</p>

It was subsequently converted to local encoding and formatted with ECMA-48 color codes. (The format is not spec’d yet, it will probably be XHTML with specified class ids.)

While using markup for the message is a big improvement, since it opens the door for formatting and more, this API still has the problem of dealing with multiple locations.

What is the solution to that?

Striking the balance between programmatic interpretation (such that, for instance, source document navigation is doable) and having the message read naturally as one coherent unit is to… maybe duplicate the information, but each time tailored for a particular consumer?

<p xmlns:l="http://example.com/">In my <l:location href="myQuery.xq" line="57" column="3">myQuery.xq at line 57, column 3</l:location>, function <span class="XQuery-keyword">fn:doc()</span> failed with code <span class="XQuery-keyword">XPTY0004</span>: the file <l:location href="myDocument.xml" line="93" column="9">myDocument.xml failed to parse at line 93, column 9</l:location>: unexpected token <span class="XQuery-keyword">&amp;</span>.</p>

This is complicated by the fact that language strings cannot be concatenated, since that prevents translation. But I think the above paragraph is possible to implement. As above, the message reads coherently, but still allows programmatic extraction. A language string and formatted data sit at opposite extremes, and maybe markup is the balance between them.
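As a rough illustration of the "programmatic extraction" half, the sketch below pulls every location out of a message written in the l:location markup shown above, and separately strips the tags to get the plain sentence. It is an assumption-laden sketch: `Location`, `extractLocations` and `plainText` are hypothetical names, a regular expression stands in for a real XML parser, and the attributes are assumed to appear in the order href, line, column.

```cpp
#include <cassert>
#include <regex>
#include <string>
#include <vector>

// One place in a source document that a message refers to.
struct Location {
    std::string href;
    int line;
    int column;
};

// Returns every <l:location> start tag found in the markup, in order.
// Assumes the attribute order href, line, column (as in the example message).
std::vector<Location> extractLocations(const std::string &markup) {
    static const std::regex pattern(
        R"re(<l:location href="([^"]*)" line="([0-9]+)" column="([0-9]+)">)re");
    std::vector<Location> result;
    for (std::sregex_iterator it(markup.begin(), markup.end(), pattern), end;
         it != end; ++it) {
        const std::smatch &m = *it;
        assert(m.size() == 4); // whole match plus three capture groups
        result.push_back(
            Location{m[1].str(), std::stoi(m[2].str()), std::stoi(m[3].str())});
    }
    return result;
}

// Removes all tags so the message reads as one coherent sentence.
// Character references such as &amp; are left as they are in this sketch.
std::string plainText(const std::string &markup) {
    static const std::regex tag("<[^>]*>");
    return std::regex_replace(markup, tag, "");
}
```

An IDE could use the extracted locations for navigation, while a terminal tool could print only the plain text. Both consumers read the same message, which is the point of duplicating the information inside the markup.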

Would this give good compiler messages and allow slick IDE integration? If not, what would?

XML APIs

November 18, 2006

How to design APIs for XML is debated daily, and has been for long. For too long. Ages ago, companies gathered at W3C to design the DOM, with language neutrality and document editing with load/save persistence as goals (it seems, and some say). But some needed other things, such as a streamed, less verbose approach, and hence SAX was brought into use. Others found SAX cumbersome, and StAX was deployed. And so on, and so on.

One urge I have is to cry out: why can we never design a sensible API? But that reaction wouldn’t be justified. Software is the implementation of ideas. When the software has to change, it’s a reaction to the ideas (the requirements) having changed.

After all, SAX works splendidly for some scenarios. I don’t expect one tool for all scenarios, because XML is used in too varied ways. But still, even though one can expect tools to become obsolete and that one size doesn’t fit all, the current situation is worse than what is reasonable.

In Qt, the dilemma the XML community has is present as well, painfully. The QtXml module provides an (in my opinion poor) implementation of DOM, and SAX. Something needs to be added in order to make XML practical to work with using Qt. Some of the ideas I’ve heard are by the book: add StAX as a streamed-but-easy-to-use API, and a XOM-like API for in-memory representation. The latter would be an API that doesn’t carry the legacy of XML’s evolution (the addition of namespaces, for instance) and in general does what an XML API is supposed to do: be an interface for the XML and therefore take care of all the pesky spec details, which XOM does in an excellent way.

If Trolltech added StAX and a XOM-like API to Qt no one could blame them. Others do it, and it is the politically correct alternative at this point of our civilization (just as DOM once was). But I’m starting to suspect that it’s the wrong direction: that the step of learning a lesson by adding yet another API could be skipped, in favour of jumping directly to what would follow.

Let’s look at what XML is:

- It is a medium, a text format for exchanging data, specified in XML 1.0 and XML namespaces. XML is absolutely terrific at this. IT’s history is tormented by interoperability problems such as encoding issues. XML solves all that in one go. It abstracts away from primitive details, and provides a platform. This is why XML is popular.

- A set of concepts to express ideas. This is all that about elements and nodes formed in a hierarchical structure (which from a reader’s standpoint can be difficult to distinguish from the text representation, since we humans instantly see the logical structure when looking at an XML document). Exactly what that is, is not so obvious. The different approaches are often referred to collectively as data models, and there are plenty of them: the XPath 2.0 Data Model, the XML Information Set, the PSVI infoset extension, the DOM (that it stands for Document Object Model is a hint), and the list goes on. These are all different ideas of what a sequence of characters arranged to be valid XML actually means.

That one can view XML as consisting of these two parts reveals a bit about how XML has evolved. First XML 1.0 arrived, taking care of syntax details. Later on, this plethora of data models arrived to formally define what XML 1.0 informally specified. Understandably, many want to make the XML specification also specify the data model. The question is of course which one to choose, and what the effects of that are.

But the list of data models doesn’t stop with the above. Those are just examples of standardized models. I believe that one data model exists for each XML scenario.

When a word processor reads in a document with the DOM, the actual data model consists of words, paragraphs, titles, sections and so on. The DOM represents that poorly, but apparently acceptably well. Similarly, when a chemistry program reads in a molecule, its data model consists of atoms.

That XML is used for different things can be seen in the APIs being created. SAX is popular because it easily allows a specialized data model to be created: the programmer receives the XML on a high level and builds the perfect data structure from that. DOM allows sub-classing of node classes by using factories and attaching user data to nodes, in order to bring the DOM instance closer to the user’s data model.

XML is not wanted. Communication is a necessary evil, and therefore XML is as well. If programs could just mindwarp their ideas, molecules and word processor documents, to another program they would, instead of delving into the perils of communicating through XML.

I believe this is a good background when tackling the big topic of providing tools for working with XML. It’s not questions like “How do we design an API that avoids the namespace problems the DOM exposes?” It starts at a higher level:

How do we allow the user to go from XML to the data model of choice in the easiest and most efficient way?

Ideally, the user shouldn’t care about details such as namespaces and parent/child relationships. If the API has to push that onto the user, it’s a necessary evil. It’s again about not getting far away from the ideal data model. The idea is in general already practiced when it comes to the most primitive part: serialization. It’s widely agreed that a specialized mechanism (a class) should take care of the serialization step.

Let’s try to apply this buzzword talk to Patternist and Qt. A QAbstractItemModel is typically used to represent the data, since the model/view concept keeps the data separate from its presentation. The user wants to read an XML file, and produce a QAbstractItemModel instance.

Patternist, just as Saxon, is designed to be able to send its output to different destinations. It’s not constrained to producing text (XML), SAX events or a DOM tree; it just uses a callback. And that callback could just build an item model. It should be possible to write that glue code such that it works for arbitrary models.[1] With such a mechanism, one would only have to write an XQuery query or XSL-T stylesheet that defines a mapping between the XML and the item model, in order to do up and down conversions.
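A minimal sketch of such a callback, in plain C++ without Qt, could look like the following. Everything here is hypothetical: `OutputReceiver` is not Patternist’s actual receiver interface, and `Item` is a stand-in for whatever the application’s item model stores. It only illustrates that an engine which reports its output as events can fill an application-side tree directly, without going through text or a DOM.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Stand-in for one entry of the application's item model.
struct Item {
    std::string name;
    std::string text;
    std::map<std::string, std::string> attributes;
    std::vector<std::unique_ptr<Item>> children;
};

// Hypothetical event interface the query engine would call with its output.
class OutputReceiver {
public:
    virtual ~OutputReceiver() = default;
    virtual void startElement(const std::string &name) = 0;
    virtual void attribute(const std::string &name, const std::string &value) = 0;
    virtual void characters(const std::string &text) = 0;
    virtual void endElement() = 0;
};

// Receiver that builds a tree of items instead of serializing XML.
class ItemTreeBuilder : public OutputReceiver {
public:
    ItemTreeBuilder() { m_open.push_back(&m_root); }

    // m_open points into m_root, so copying would leave dangling pointers.
    ItemTreeBuilder(const ItemTreeBuilder &) = delete;
    ItemTreeBuilder &operator=(const ItemTreeBuilder &) = delete;

    void startElement(const std::string &name) override {
        auto item = std::make_unique<Item>();
        item->name = name;
        Item *raw = item.get();
        m_open.back()->children.push_back(std::move(item));
        m_open.push_back(raw); // the new item is now the innermost open one
    }

    void attribute(const std::string &name, const std::string &value) override {
        m_open.back()->attributes[name] = value;
    }

    void characters(const std::string &text) override {
        m_open.back()->text += text;
    }

    void endElement() override {
        assert(m_open.size() > 1); // never close the invisible root
        m_open.pop_back();
    }

    // The invisible root; its children are the top-level elements received.
    const Item &root() const { return m_root; }

private:
    Item m_root;
    std::vector<Item *> m_open; // stack of currently open items
};
```

With something along these lines, the mapping from XML to the model lives entirely in the query or stylesheet, and the glue code stays the same for every model that can be expressed as named items with attributes and children.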

Using Patternist to directly create item models might not be the way to go. But I do think one should concentrate on what the user wants to achieve instead of trying to fix the current tools (perhaps it doesn’t matter that the hammer is broken, because in either case a screwdriver should be used). And amongst what the user wants to do, I believe converting between XML and the data model of choice is a very common scenario.

1. In general, it all seems interesting to write “interactive” output receivers and trees with Qt. One would be able to write queries/stylesheets that generate widgets, write queries over the file system or QObject tree, etc. But that’s another topic.

Finding Invalid Static Casts

August 24, 2006

In Patternist, the XSL-T, XQuery and XPath framework, casting between class hierarchies is common, as in most other compiler software. For that reason, as discussed with Vincent, I added a class for simplifying this and making it safer. The doxygen documentation explains it all.

For me it brought an unexpected side effect: the asserts performing dynamic casts found two cases where the code performed invalid static casts. Previously, the code compiled, the code worked and tests passed. I knew the code paths were run. Nevertheless, the static casts were invalid because the values being cast were not instances of the target type.

Why did the casts work then? Hard to tell precisely, but it involved factors such as which compiler was used, the hardware platform, compiler switches and the layout of the classes. The code the compiler generated for accessing the class instances happened to work even though the instances weren’t of the types the compiler was told.

Hence, these dynamic_cast asserts are close to invaluable, since they found two bugs that would first have appeared on another setup, where I wouldn’t have been able to reproduce them. Bugs that probably would first have been encountered in production code.

On large projects I think it is wise to use a mechanism such as CppCastingHelper. I wonder how many static source code analyzers verify that static casts are valid.
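The general technique can be sketched in a few lines. This is not CppCastingHelper itself, whose interface may well differ; `checkedCast` and the three node types below are made-up names. The idea is simply to pay for a dynamic_cast inside an assert in debug builds, and to fall back to the plain static_cast when asserts are compiled out with NDEBUG.

```cpp
#include <cassert>

// Casts like static_cast, but in debug builds first verifies with
// dynamic_cast that the object really is of the target type.
// A null pointer is passed through unchanged.
template <typename Target, typename Source>
Target *checkedCast(Source *source) {
    assert(source == nullptr || dynamic_cast<Target *>(source) != nullptr);
    return static_cast<Target *>(source);
}

// Hypothetical class hierarchy used only to exercise the helper.
struct Node {
    virtual ~Node() = default; // polymorphic, so dynamic_cast is allowed
    virtual int childCount() const { return 0; }
};

struct ElementNode : Node {
    int childCount() const override { return 2; }
};

struct TextNode : Node {};
```

Calling `checkedCast<TextNode>(node)` on a pointer that actually refers to an `ElementNode` trips the assert in a debug build, whereas a bare static_cast would compile and might even appear to work, which is exactly the situation described above.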

Qt + Flex = Problems

July 7, 2006

GNU Flex, “the fast lexical analyser generator”, is a handy tool for writing compiler-related software, as those who have used it know. It saves tremendous amounts of code, allows tokenization rules to be expressed in a concise way, and the generated tokenizer is fast.

For that reason, it is a pity Flex cannot be used in serious projects. Because it doesn’t support Unicode.

Doxygen Galore

June 21, 2006

During the weekend, Vincent and I engaged in a Doxygen-galore by enabling, among many other settings, CALL_GRAPH and CALLER_GRAPH. It is graphically impressive due to the size of Patternist(although the screens Zack posted were pretty neat too).

This is the inheritance graph for FunctionCall, the base class for all function call implementations for XPath/XQuery. It is perhaps better called “the spider”.

The same graph for the Iterator class is a bit reminiscent of a ball gown, when viewed from the side.

With TEMPLATE_RELATIONS set, the template instantiation is shown, as in Vincent’s recent work on ComparisonPlatform.

I find CALL_GRAPH and CALLER_GRAPH useful when learning code, or when revisiting a large piece of code written a long time ago. Iterator::cardinality() or Expression::evaluateEBV() demonstrate it nicely: one can simply surf the control flow graph, especially when combined with enabling the source browser, as seen in for example ValueComparison.cpp. However, it is a pity Doxygen’s control flow analysis doesn’t understand template instantiations, because the vast majority of Patternist’s objects are handled with smart pointers. For example, the call/caller graphs don’t generate vertices for things like m_object->myFunction() if m_object is of a smart pointer type.

Another practical thing, which I had no idea Doxygen was capable of, is building graphs describing file inclusions. Quite practical if one is planning a refactoring and needs to find out what needs what, in order to avoid circular inclusions, and so on.

The output of Doxygen, even without the hefty settings, is quite technical and information intensive, compared to the output of qdoc, as seen in Qt’s Assistant. An arbitrary Doxygen page is overloaded with statistics, links and what not, while Assistant is more concise — or lacking information, if one likes.

I think Doxygen’s style is useful for developers trying to tame large projects, because of its focus on implementation aspects such as where a function is defined, supplying header files, linking to the extent technically possible, and so on. However, for someone using an API as a user, most of the information provided isn’t that useful, but contributes instead to information overload. Most of this can be turned off, but I still say Assistant is a notch better for communicating an API to users, as opposed to its developers.